New Google Search Console Robots.txt Report Replaced Robots.txt Tester Tool

Google announced a new robots.txt report in Google Search Console and, at the same time, said it will sunset the old robots.txt tester tool. The new report shows which robots.txt files Google found for the top 20 hosts on your site, when each was last crawled, and any warnings or errors encountered.

Google also said on Twitter, "We're also making relevant info available from the Page indexing report. As part of this update, we're sunsetting the robots.txt tester."

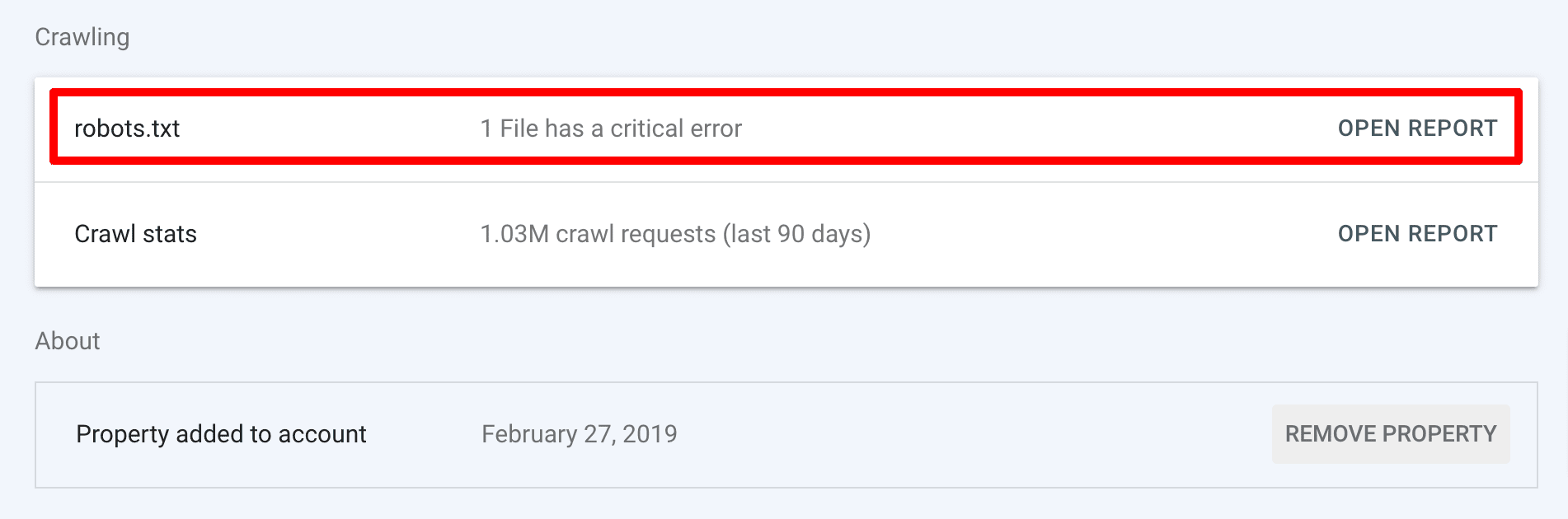

To access the report, go to the Settings link for your Search Console profile.

The report for this site shows that, yes, I have a critical error. The details view for that error also lets you request a recrawl of your robots.txt file.

The help document covers this report in much more detail.

Some are not happy that the robots.txt tester tool is gone but Bing still has one:

Google just killed its robots.txt tester. Now, to test if a URL is allowed via robots.txt you can:

1) Perform a "Live Test" via the URL Inspection Tool
2) Use a 3rd-party tester

The URL Inspection Tool doesn't provide the same info as the old tool, so you likely want to do both https://t.co/lfPCGmfZ44 pic.twitter.com/SglKWf5zp0

— Cyrus SEO (@CyrusShepard) November 15, 2023

I love the new robots.txt reporting (it's in settings btw), but removing the robots.txt Tester? I love that tool and it can be super-helpful. There are other tools that do similar things, including one from Bing, but I'd love to see that stay in GSC. :) https://t.co/hMIJQjfpQV

— Glenn Gabe (@glenngabe) November 15, 2023
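Since both tweets point to third-party testers, here is a rough idea of what such a check does, sketched with Python's standard-library `urllib.robotparser`. The robots.txt content, the `is_allowed` helper, and the example.com URLs are all made up for illustration. Note that this is not Google's parser: Python applies the first matching rule in a group, while Google documents that it uses the most specific matching rule, so results can differ on files with overlapping Allow and Disallow lines. Treat it as a quick local sanity check, not a replacement for the Live Test in the URL Inspection Tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /

User-agent: Googlebot
Disallow: /drafts/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if Python's parser says user_agent may fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    checks = [
        ("Googlebot", "https://example.com/drafts/post"),
        ("Googlebot", "https://example.com/private/x"),
        ("OtherBot", "https://example.com/private/x"),
        ("OtherBot", "https://example.com/public"),
    ]
    for agent, url in checks:
        verdict = "allowed" if is_allowed(ROBOTS_TXT, agent, url) else "blocked"
        print(f"{agent:10} {url:40} {verdict}")
```

In this sample file, Googlebot has its own group, so only the `/drafts/` rule applies to it and the `/private/` rule in the `*` group does not. That group-selection behavior is easy to miss when reading a robots.txt file by eye, which is part of why a tester is handy.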
